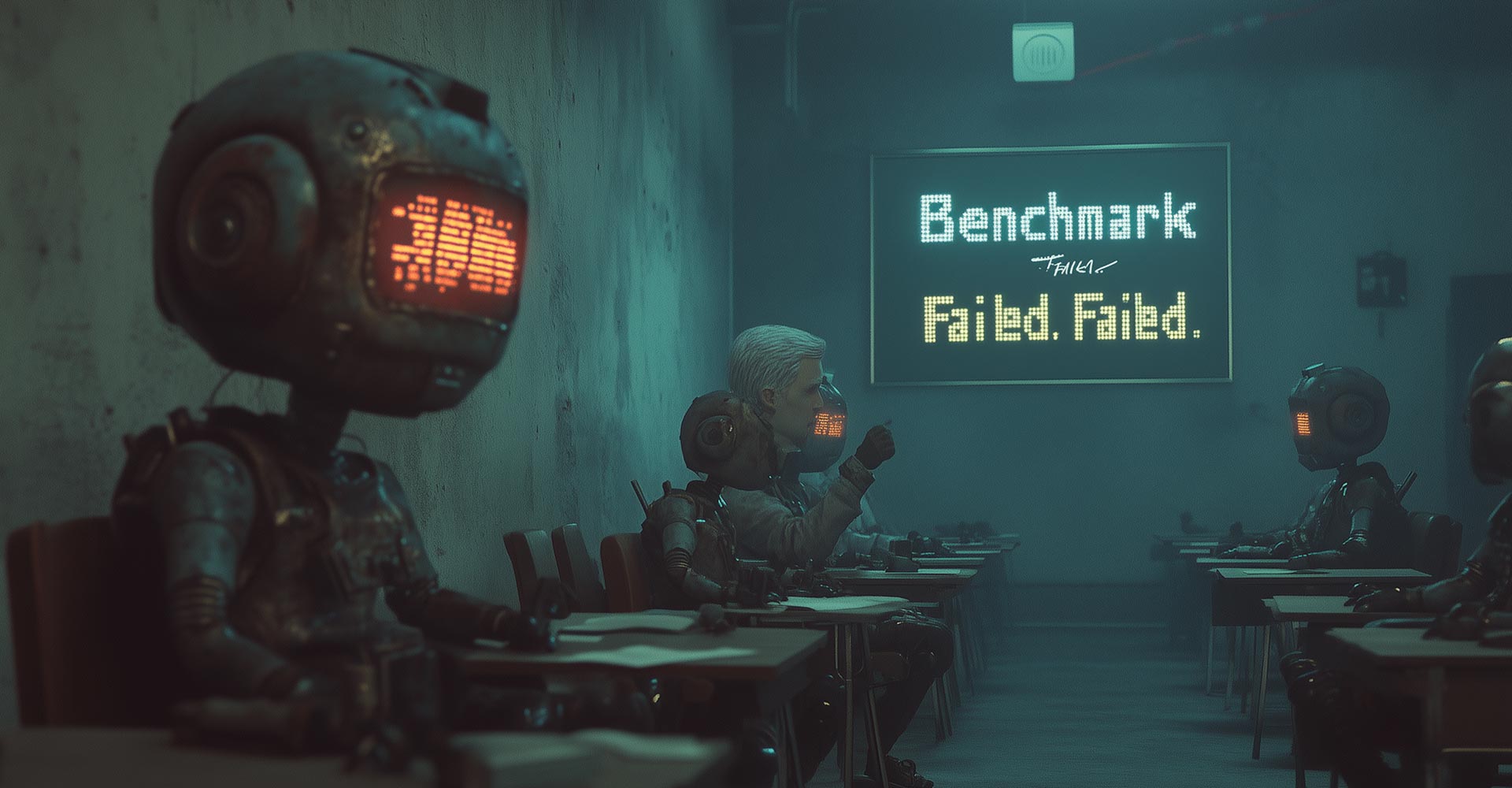

Imagine sitting an exam so brutal that most humans would struggle to pass it, and then handing it to a machine. That’s exactly what Humanity’s Last Exam (HLE) does. It’s not the sort of test where you can bluff your way through with a cheeky grin and a good guess. No, this one dives deep (really deep) into the murky waters of expert-level reasoning, logic, and nuance. And guess what? Even our cleverest AI friends are flunking it.

Why We Needed a New Exam in the First Place

We’ve reached a strange point in AI where models like GPT-4, Claude, and Gemini breeze through most existing benchmarks with top marks. That’s not because they’ve hit enlightenment; it’s because the tests have become too easy for them. When AI hits 90%+ on your quiz, you’re not measuring growth anymore; you’re mostly measuring what it has already seen.

Enter HLE: an ultra-challenging benchmark built by nearly 1,000 subject-matter experts from over 500 institutions worldwide. It’s designed to test AI’s ability to reason, not just regurgitate. The exam spans more than 100 subjects, with maths making up 41% of the questions (which explains why your humanities-loving chatbot might start to sweat).

AI’s Current Performance: Room for… Growth

If HLE were a pub quiz, AI would be the mate who turns up late, forgets his wallet, and gets 2 questions right out of 50. Most models are hovering below 10% accuracy, with a few overachievers like OpenAI’s Deep Research brushing against the lofty heights of 26.6%. That might not sound like much, but in this context, it’s a roaring success.

But (and it’s a big but) many of these improvements may reflect AI studying the exam rather than understanding the subject. It’s classic Goodhart’s Law: when a benchmark becomes a target, it stops being a good benchmark.

So, What Does a High Score Mean?

A decent HLE score doesn’t crown an AI as the new Einstein. It means the AI can work through tough, closed-ended, expert-level problems that each have a single verifiable answer. That’s important, but it’s not AGI. Real general intelligence needs more than book smarts: it needs common sense, creativity, adaptability, and a fair bit of emotional finesse. (Spoiler: AI still can’t tell a joke properly. Yet.)

The Catch: We’re Chasing Our Own Tail

The moment AI gets good at HLE, we’ll need HLE 2.0. Then 3.0. It’s a never-ending arms race between human ingenuity and silicon cunning. Some say the name “Humanity’s Last Exam” is a bit dramatic, and maybe it is, but it raises a haunting prospect: what happens when we’re no longer the ones writing the tests?

The Takeaway: HLE Isn’t the End, It’s the Beginning

HLE is a brutal wake-up call. It shows just how far AI still has to go before it can reason through expert-level problems the way human specialists do. But it also lights the path forward, encouraging researchers to build AI that’s not just smart, but wise.

And here’s the kicker: we don’t even know how well humans score on it. The questions are so tough that most college students wouldn’t even understand them. Specialists might ace bits of it, but no single human could realistically master the whole thing. In many ways, it’s as much a test of our own intellectual limits as it is of AI’s. If anything, HLE reveals that we’re benchmarking AI not against average intelligence but against the sharpest edges of expert knowledge. And that’s a high bar.

So next time someone says “AI is coming for our jobs,” ask them if it can pass Humanity’s Last Exam. If not, your job’s probably safe—for now.